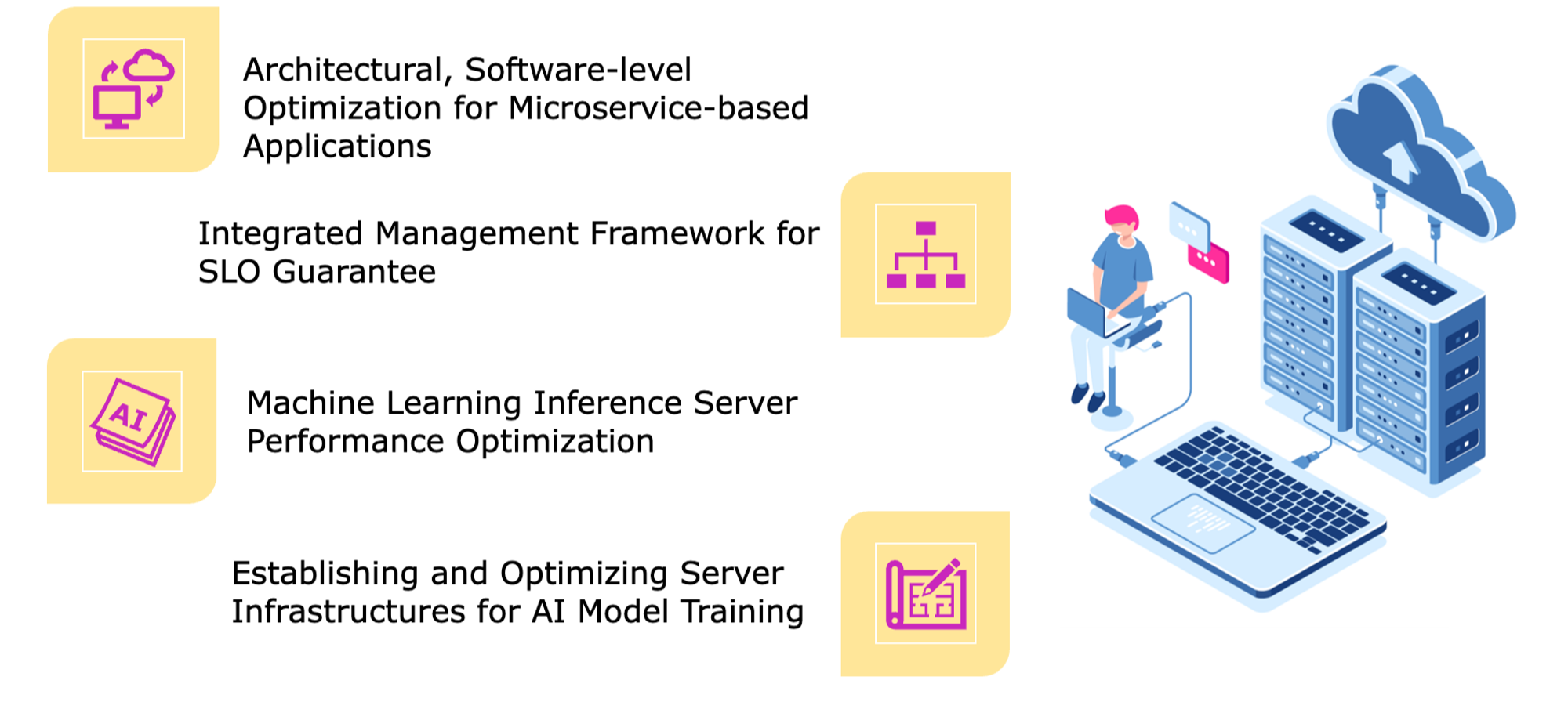

Our research focuses on improving the efficiency of cloud-based systems and applications. A key area of interest is the architecture and optimization of microservice-based applications, where we explore architectural and software-level approaches that improve performance, scalability, and resource utilization. We are also developing an integrated management framework designed to provide Service Level Objective (SLO) guarantees, so that service quality and performance can be maintained across diverse cloud services. In addition, we optimize the performance of machine learning inference servers through careful analysis and design, with the aim of enabling fast and accurate inference across a range of applications. Our research extends to server infrastructure for AI model training: by tuning server configurations, resource allocation, and distributed processing techniques, we aim to accelerate the training of complex AI models. Ultimately, our goal is to advance cloud computing with solutions that streamline application deployment, management, and performance while addressing the particular challenges of AI and machine learning workloads.

Cloud Computing & Application Optimization

Publications

- (Conference) BrokenSleep: Remote Power Timing Attack Exploiting Processor Idle States

- (Conference) Co-UP: Comprehensive Core and Uncore Power Management for Latency-Critical Workloads

- (Conference) vSPACE: Supporting Parallel Network Packet Processing in Virtualized Environments through Dynamic Core Management

- (Conference) IDIO: Network-Driven, Inbound Network Data Orchestration on Server Processors

- (Patent) Apparatus and method for interrupt control

- (Patent) Electronic device for controlling interrupt based on transmission queue and control method thereof

- (Journal) IDIO: Orchestrating Inbound Network Data on Server Processors

- (Patent) Apparatus and method for interrupt control

- (Conference) VIP: Virtual Performance-State for Efficient Power Management of Virtual Machines

- (Poster) Janus: Supporting Heterogeneous Power Management in Virtualized Environments

- (Journal) Virtual Snooping Coherence for Multi-Core Virtualized Systems

- (Conference) vCache: Architectural Support for Transparent and Isolated Virtual LLCs in Virtualized Environments

- (Journal) vCache: Providing a Transparent View of the LLC in Virtualized Environments

- (Conference) Virtual Snooping: Filtering Snoops in Virtualized Multi-cores